October 8, 2024

What is Responsible AI - and is this achievable for my organization?

AI is beginning to change the game for affordable housing organizations. It's the innovation of our generation.

But here’s the catch: AI is only as good as the hands that wield it. Without a responsible approach, AI can backfire by amplifying biases, mishandling sensitive data, or making decisions that undermine trust and confidence.

In affordable housing, the stakes are high. Your mission is to serve communities fairly and equitably, and AI should strengthen that—not complicate it. That’s where responsible AI comes in. This isn’t about becoming an AI expert or developing tools from scratch. It’s about holding the tools and the vendors behind them accountable to the values and goals of your organization.

Let’s talk about why responsible AI matters and outline a practical framework for using AI correctly:

Why Responsible AI Matters in Affordable Housing

AI Isn’t Neutral

AI tools make decisions based on patterns in data. The problem? That data can reflect historical inequities, bias, prejudice, and discriminatory elements. If left unchecked, AI can quietly repeat - and scale - those historical patterns.

You Handle Sensitive Data

From income verification to resident histories, and from equity investor information to waterfalls, affordable housing organizations work with deeply personal and financially sensitive information. Mishandling it with AI, even unintentionally, erodes trust and opens your organization to legal risk.

Your Mission Comes First

Let’s not forget why affordable housing organizations exist: to serve people, particularly those who are underserved. AI tools - and the decisions stemming from those tools - need to reflect that mission, prioritizing fairness and transparency over speed or efficiency alone.

It’s About Accountability

Stakeholders - whether they’re residents, regulators, or equity investors - expect your organization to use AI responsibly. When you can explain how your tools work, why they’re being used, and what they’re achieving, you earn trust and buy-in.

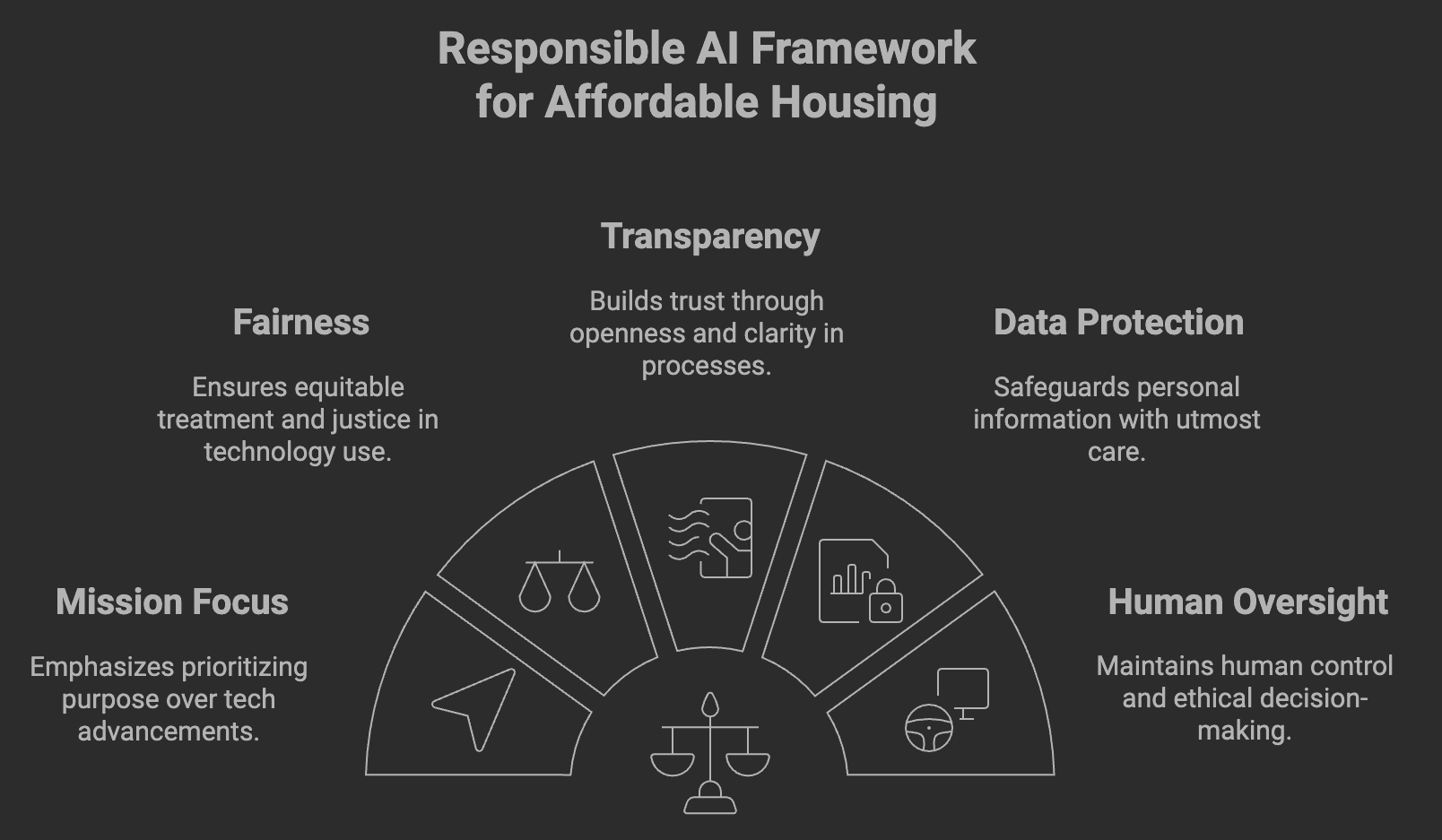

Framework for Responsible AI in Affordable Housing

Responsible AI doesn’t mean overhauling your entire tech stack or becoming an expert in machine learning. It’s about knowing what to expect from vendors, ensuring the tools you use align with your values, and maintaining oversight once they’re integrated. We think about Responsible AI across six elements:

Start With Your Mission, Not the Technology

What This Means: AI tools should serve your goals - not the other way around. Before introducing AI, define what success looks like for your organization and how the tool will support that.

How to Apply It:

Be clear about your objectives. Are you trying to improve tenant communication? Speed up application processing? Forecast repairs? Generate reporting? Process invoices? Tie every AI tool to a measurable outcome.

Push back on vendors pitching “catch-all” solutions. Focus on how their tool specifically solves your problem.

Make decisions based on value - not hype. If a tool doesn’t align with your mission, move on.

Keep Fairness at the Center

What This Means: AI must work for everyone it affects, and work the way they expect it to. Ensure tools don’t introduce bias or create inequitable outcomes, especially in areas like leasing, resident services, or resource allocation.

How to Apply It:

Ask vendors how they test for and mitigate bias in their algorithms. If they can’t explain it, that’s a red flag.

Conduct audits of AI outputs. Look for patterns that could disadvantage certain groups, and be prepared to take corrective action.

When possible, give people the ability to challenge or appeal AI-based decisions.
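An output audit can start small. The sketch below is one possible approach, not a prescribed method: it compares approval rates across groups and flags any group whose rate falls below 80% of the highest group's rate. The 0.8 threshold borrows the "four-fifths" rule of thumb from employment-selection guidance and is an assumption here, not a housing-specific legal standard. The group labels and sample decisions are made up for illustration.

```python
from collections import defaultdict

def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}

def flag_disparities(rates, threshold=0.8):
    """Return groups whose rate is below `threshold` times the best group's rate.

    The threshold is an illustrative assumption; choose yours with counsel.
    """
    best = max(rates.values())
    if best == 0:
        return {}
    return {g: r / best for g, r in rates.items() if r / best < threshold}

# Hypothetical sample: (group label, whether the AI tool recommended approval)
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
flags = flag_disparities(rates)
print(rates)
print(flags)
```

A flagged group is a prompt for human review, not proof of bias. Small samples in particular can produce large swings in these ratios.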

Transparency Builds Trust

What This Means: Residents, staff, and stakeholders should understand how AI tools make decisions—and why. Avoid “black-box” systems that leave everyone guessing.

How to Apply It:

Work with vendors that can clearly explain how their AI works, ideally in plain language.

Tell residents, staff, and other stakeholders when AI is involved in a decision and what role it plays.

Set up processes for your team to review and validate AI decisions. The more eyes on the process, the better.

Protect Data Like It’s Your Own

What This Means: Data is the lifeblood of AI, but it’s also a huge responsibility. Mishandling personal or sensitive data isn’t just a privacy or security risk - it’s a trust killer.

How to Apply It:

Confirm with vendors who owns the data going into and coming out of the AI tool. (Spoiler: It should always be you.)

Ask vendors about their security measures. Encryption, access controls, and compliance with applicable regulations (such as GDPR or HIPAA, where they apply) are the minimum standard.

Limit data collection to what’s necessary. AI doesn’t need every data point under the sun to function.
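Data minimization can be enforced in practice with an allow-list: only the fields a tool actually needs leave your systems. The sketch below is a minimal illustration. The field names and the sample record are hypothetical, and the right allow-list depends on the tool and use case.

```python
# Hypothetical allow-list: the only fields this particular tool needs.
ALLOWED_FIELDS = {"application_id", "household_size", "annual_income"}

def minimize(record, allowed=ALLOWED_FIELDS):
    """Return a copy of `record` containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed}

# Hypothetical applicant record, for illustration only.
applicant = {
    "application_id": "APP-001",
    "household_size": 3,
    "annual_income": 41000,
    "ssn": "000-00-0000",
    "notes": "Called twice about move-in date",
}

shared = minimize(applicant)
print(shared)
```

Starting from an allow-list rather than a block-list means any new field added to your records stays private until someone deliberately decides to share it.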

Keep Humans in Charge

What This Means: AI is a tool - not a replacement for your team’s judgment. Critical decisions that impact your organization should always have human oversight.

How to Apply It:

Use AI for what it’s good at: flagging risks, identifying patterns, or automating repetitive tasks. While AI may provide quicker insights, leave the big decisions to people.

Train your team to test and interpret AI outputs critically. They should understand when to rely on AI and when to override it.

Establish clear escalation processes for decisions that need a human touch.
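An escalation process can be written down as a simple routing rule. The sketch below is one way to express it, under stated assumptions: the high-impact categories, the 0.9 confidence cutoff, and the idea that the tool reports a confidence score are all hypothetical and would need to match your own policies and your vendor's outputs.

```python
from dataclasses import dataclass

# Hypothetical categories this organization treats as always needing a person.
HIGH_IMPACT = {"lease_denial", "eviction_referral", "rent_adjustment"}

@dataclass
class Recommendation:
    category: str
    confidence: float  # assumed: 0.0 to 1.0, as reported by the AI tool

def route(rec, min_confidence=0.9):
    """Decide who acts on an AI recommendation."""
    if rec.category in HIGH_IMPACT:
        return "human_decision"  # a person decides; the AI output is only an input
    if rec.confidence < min_confidence:
        return "human_review"    # a person checks before anything happens
    return "automated"           # low-stakes and high-confidence

print(route(Recommendation("lease_denial", 0.99)))
print(route(Recommendation("invoice_coding", 0.60)))
print(route(Recommendation("invoice_coding", 0.95)))
```

Note that high-impact categories go to a person regardless of how confident the tool is. Confidence only matters for lower-stakes work.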

Monitor, Adjust, Repeat

What This Means: AI isn’t “set it and forget it.” Responsible use requires ongoing monitoring and training to ensure tools are delivering fair, accurate, and reliable results.

How to Apply It:

Regularly review key metrics, like error rates, user feedback, or satisfaction scores.

Audit AI tools periodically to catch any unintended issues, such as bias creeping into decision-making.

Stay in touch with vendors to understand updates or changes to the system—and how they might impact your organization.
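Ongoing monitoring can be as simple as tracking an error rate per review period and flagging the periods that exceed a tolerance. The sketch below assumes your team records, for each AI output it checks, whether the output turned out to be wrong. The 5% tolerance and the sample data are illustrative assumptions, not recommended values.

```python
def error_rate(outcomes):
    """Share of reviewed outputs judged wrong (True means an error)."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0

def periods_needing_review(by_period, max_rate=0.05):
    """Return the periods whose error rate exceeds `max_rate`."""
    return [
        period
        for period, outcomes in by_period.items()
        if error_rate(outcomes) > max_rate
    ]

# Hypothetical review log: period -> one True/False entry per reviewed output.
review_log = {
    "2024-07": [False] * 39 + [True],      # 1 error in 40 reviews
    "2024-08": [False] * 17 + [True] * 3,  # 3 errors in 20 reviews
}

flagged = periods_needing_review(review_log)
print(flagged)
```

A jump in a flagged period is a good moment to ask the vendor what changed, since model or data updates often show up first as a shift in metrics like this.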

Responsible AI Is an Ongoing Practice

AI has the potential to transform affordable housing, but only if it’s used responsibly. That means holding vendors accountable, keeping your organization’s mission front and center, and maintaining oversight at every stage of the process.

Remember: AI is here to assist, not to dictate. By approaching it responsibly, you can use AI tools to strengthen your operations, improve resident outcomes, and build trust with stakeholders.

Want additional insight on Responsible AI in affordable housing? The Strategic Edge can help. Book a 15-minute call with our advisors to chat about your objectives - we'll help clarify how AI strategy, AI readiness, and roadmaps align with the elements of responsible AI.